Architecture

PaletteAI abstracts away the complexity of deploying AI and ML application stacks on Kubernetes. Built on proven orchestration technologies, PaletteAI enables data science teams to deploy and manage their own AI and ML application stacks while platform engineering teams retain control over concerns such as infrastructure and security.

PaletteAI is built from Mural, a Spectro Cloud project. As a result, many PaletteAI components, such as the primary namespace (mural-system), refer to Mural. When you come across these references, treat Mural and PaletteAI as nearly synonymous.

Palette

PaletteAI is built on top of Palette and leverages Palette's strengths to provide a seamless deployment and lifecycle management experience for data science teams.

Through Palette, platform engineering teams can configure bare metal devices as compute resources for PaletteAI. Palette addresses the challenges of constrained environments, such as Edge, airgap, and highly regulated environments, through the Palette VerteX edition. By delegating infrastructure provisioning and Day-2 management to Palette, platform engineering teams can focus their attention on the application stacks rather than the underlying infrastructure.

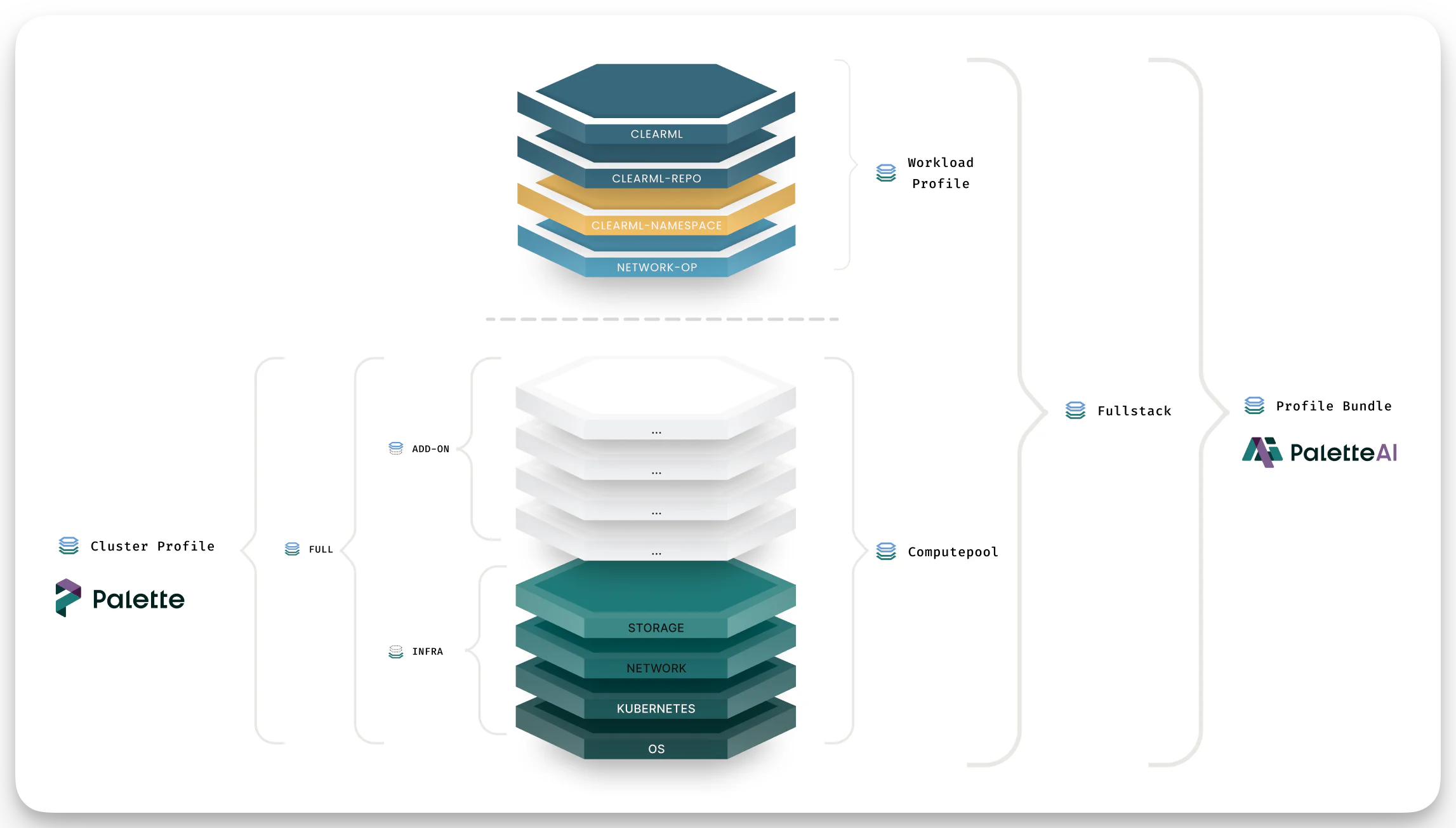

Profile Bundles

PaletteAI bundles Palette Cluster Profiles and PaletteAI Workload Profiles into Profile Bundles, allowing platform teams to package fine-tuned cluster configurations as reusable infrastructure and application stacks for data scientists. Platform engineers can maintain multiple versions of a Profile Bundle and roll out updates to clusters as needed.

Refer to our Profile Bundles guide to learn more about how Palette and PaletteAI use profiles to deploy clusters and applications.

Device Discovery

PaletteAI discovers devices or machines through the Compute resource, which uses Palette credentials from the Settings resource to query available hardware. Information such as the device type, network interface, CPU, memory, GPU, GPU memory, and more is collected to help data science teams customize the AI/ML application stack. If Palette is unable to gather information about a device, platform engineers can manually assign a set of key tags to help PaletteAI identify the device correctly. Refer to our Machine Discovery guide to learn more about tagging compute resources.
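The fallback from discovered hardware information to manually assigned tags can be illustrated with a minimal sketch. It is not PaletteAI's implementation: the `Device` class, the `resolve` and `gpu_capable` helpers, and the `gpu-memory-gib` key are all hypothetical names chosen for illustration. Refer to the Machine Discovery guide for the actual tag keys.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Device:
    """Hypothetical view of a device: discovered values plus manual tags."""
    name: str
    discovered: Dict[str, Optional[int]]  # None means discovery found nothing
    tags: Dict[str, str] = field(default_factory=dict)


def resolve(device: Device, key: str) -> Optional[int]:
    """Prefer the discovered value; fall back to a manually assigned tag."""
    value = device.discovered.get(key)
    if value is not None:
        return value
    tag = device.tags.get(key)
    return int(tag) if tag is not None else None


def gpu_capable(devices: List[Device], min_gpu_memory_gib: int) -> List[str]:
    """Return names of devices whose resolved GPU memory meets the minimum."""
    return [
        d.name
        for d in devices
        if (resolve(d, "gpu-memory-gib") or 0) >= min_gpu_memory_gib
    ]


devices = [
    Device("edge-a", {"gpu-memory-gib": 80}),
    # Discovery failed for edge-b, so a manual tag supplies the value.
    Device("edge-b", {"gpu-memory-gib": None}, {"gpu-memory-gib": "24"}),
    Device("edge-c", {}),
]
print(gpu_capable(devices, 24))
```

In this sketch, a device with no discovered value and no tag is treated as having no GPU memory, so it is never selected for GPU workloads.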

Deployment Architecture

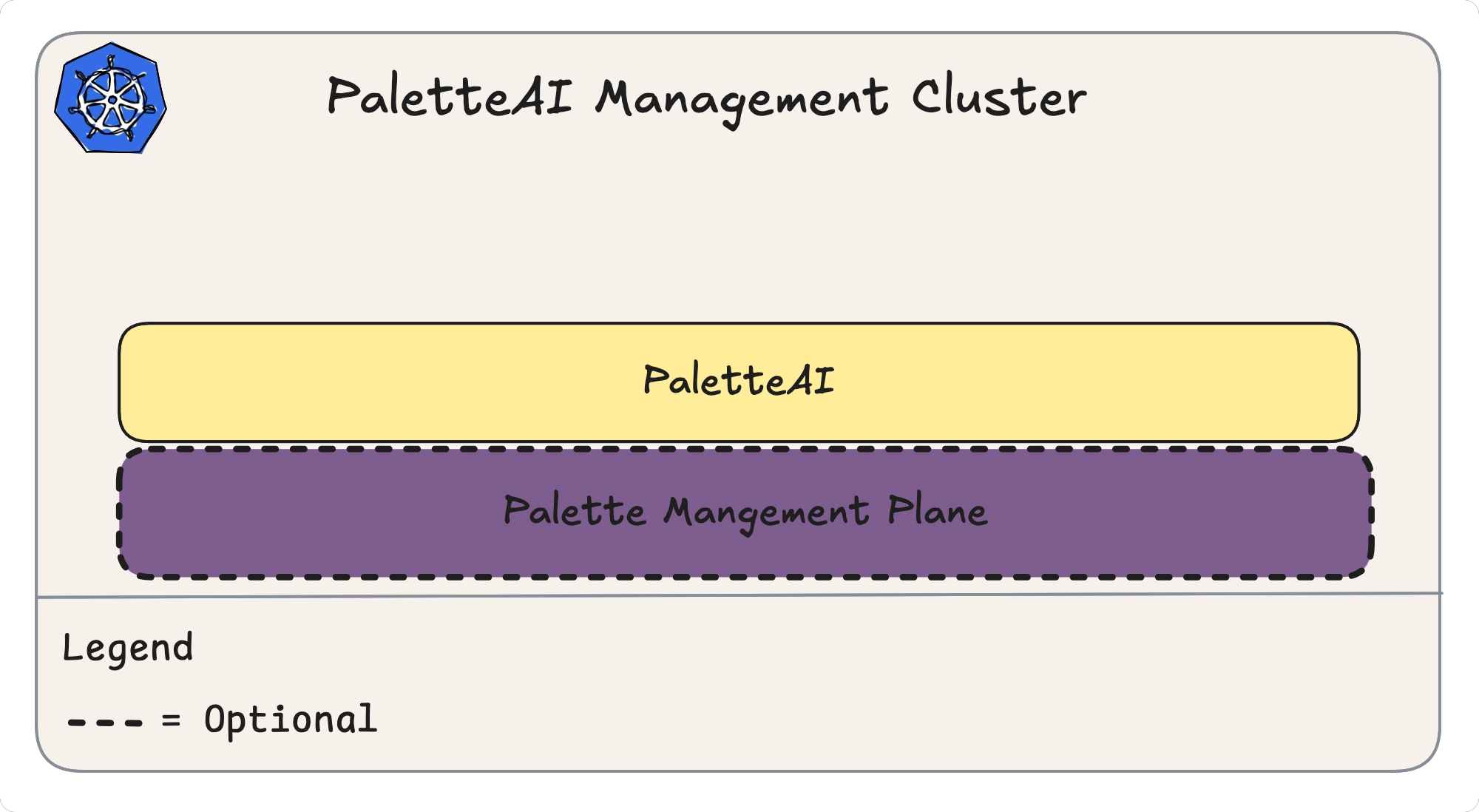

PaletteAI uses a hub-spoke architecture where a central control plane orchestrates workload deployments across multiple Kubernetes clusters.

The hub cluster runs the PaletteAI control plane components and can be installed on any Kubernetes cluster, including alongside self-hosted Palette or independently.

Meanwhile, spoke clusters (also known as Compute Pools) are where AI/ML applications actually run. Spoke clusters are deployed through Palette.

Refer to Hub and Spoke Model for an in-depth look at PaletteAI's hub-spoke architecture and how applications are deployed on spoke clusters.

OCI Registries

PaletteAI's functionality is built on Kubernetes Custom Resource Definitions (CRDs), so you can manage PaletteAI programmatically through the Kubernetes API. These resources are versioned, stored, and managed as YAML files in an OCI registry, such as Zot, and applied to your PaletteAI instance through kubectl commands or GitOps workflows.

Refer to OCI Registries for more information.
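Because PaletteAI resources are ordinary Kubernetes custom resources, a manifest can be generated by any tool that emits the standard `apiVersion`, `kind`, `metadata`, and `spec` structure. The sketch below shows that general shape only. The API group `example.com/v1alpha1`, the kind `ExampleWorkload`, the namespace `team-a`, and the `spec` fields are placeholders, not real PaletteAI resource definitions.

```python
import json


def custom_resource(api_version, kind, name, namespace, spec):
    """Build a Kubernetes custom resource manifest as a plain dictionary."""
    return {
        "apiVersion": api_version,
        "kind": kind,
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


# Placeholder values; substitute the group, kind, and spec of a real CRD.
manifest = custom_resource(
    api_version="example.com/v1alpha1",
    kind="ExampleWorkload",
    name="demo",
    namespace="team-a",
    spec={"replicas": 1},
)

# kubectl accepts JSON as well as YAML manifests.
print(json.dumps(manifest, indent=2))
```

The printed document could be saved to a file and applied with `kubectl apply -f <file>`, or committed to a repository that a GitOps workflow reconciles.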

Security

PaletteAI implements enterprise security through authentication, authorization, and multi-tenancy controls.

Dex integrates with existing identity providers (SAML, OIDC, LDAP) and issues tokens trusted by the hub cluster's Kubernetes API server. Identity provider (IdP) groups map to role-based access control (RBAC) roles that control access to PaletteAI resources, with credentials stored server-side only.
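In plain Kubernetes terms, mapping an IdP group to a role is typically expressed as a RoleBinding whose subject is a `Group`. The following sketch builds such a manifest. It illustrates the standard Kubernetes RBAC schema rather than the exact objects PaletteAI creates, and the group, role, and namespace names are hypothetical.

```python
import json


def group_role_binding(group, role, namespace):
    """Build a RoleBinding that grants `role` to every member of `group`."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{group}-{role}", "namespace": namespace},
        "subjects": [
            {
                "kind": "Group",
                "name": group,  # group claim carried in the issued token
                "apiGroup": "rbac.authorization.k8s.io",
            }
        ],
        "roleRef": {
            "kind": "Role",
            "name": role,
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }


# Hypothetical names for illustration only.
binding = group_role_binding("data-science", "project-editor", "team-a")
print(json.dumps(binding, indent=2))
```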

Multi-tenancy is enforced through namespace isolation per Project, with RBAC policies at both Tenant and Project levels and GPU quotas to prevent resource exhaustion. Hub-spoke communication uses Open Cluster Management (OCM) with mutual TLS; Palette credentials are managed in Kubernetes Secrets, and Flux pulls artifacts from trusted OCI registries. All internal communication uses HTTPS with cert-manager-issued certificates.
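One common way to cap GPU consumption per namespace in Kubernetes is a ResourceQuota on an extended resource. The sketch below assumes NVIDIA GPUs exposed as `nvidia.com/gpu` and a hypothetical Project namespace named `team-a`. Whether PaletteAI implements its GPU quotas with this exact object is an assumption, so treat this as an illustration of the mechanism only.

```python
import json


def gpu_quota(namespace, max_gpus):
    """Build a ResourceQuota limiting total GPU requests in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "gpu-quota", "namespace": namespace},
        "spec": {
            # Extended resources are quota-limited through the "requests." prefix.
            "hard": {"requests.nvidia.com/gpu": str(max_gpus)}
        },
    }


# Hypothetical namespace and limit for illustration only.
quota = gpu_quota("team-a", 4)
print(json.dumps(quota, indent=2))
```

With a quota like this in place, Kubernetes rejects new pods in the namespace once their combined GPU requests would exceed the limit, which keeps one Project from exhausting shared GPUs.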

Refer to Security to learn more about PaletteAI's security-first design.