MLPlatform

An MLPlatform is a resource that represents a Machine Learning (ML) or Artificial Intelligence (AI) platform, application stack, or framework. At its core, an MLPlatform is an application stack. An MLPlatform can be deployed to a dedicated environment or a shared environment. The MLPlatform is the primary PaletteAI resource that downstream consumers, such as data scientists and business users, interact with. If the underlying application stack supports it, the MLPlatform exposes a URL that you can use to access the application stack.

The PaletteAI User Interface (UI) provides a visual interface for data scientists and business users to deploy and access MLPlatforms. The UI is optimized for these personas so they can deploy and access MLPlatforms quickly and with minimal friction. For more advanced use cases, you can deploy and manage MLPlatforms through the MLPlatform Custom Resource Definition (CRD). Check out the MLPlatform CRD reference to view all available fields and configuration options.

Deployment Modes

An MLPlatform can be deployed to a dedicated environment or a shared environment. The following sections describe each deployment mode.

Dedicated Environment

A dedicated environment is a Mural environment dedicated to the deployment of a single MLPlatform. No other MLPlatforms can be deployed to a dedicated environment, so the MLPlatform is isolated from other environments. When a dedicated environment is used, PaletteAI deploys a dedicated Kubernetes cluster for the MLPlatform, using available compute resources that meet the requirements requested by data scientists, ML engineers, or other business users. This mode is only available if the Palette integration is configured and the required compute resources are available.

What is a Mural environment?

A Mural environment is an abstraction that dictates where a workload should be deployed. In this context, a workload is an MLPlatform. The environment is built on top of Open Cluster Management (OCM) Placements. The Placement ensures the workload gets federated to the correct Kubernetes cluster. In a dedicated environment, there is only one Kubernetes cluster in the environment, as the environment is dedicated to the deployment of a single MLPlatform. This isolation is achieved through a dedicated placement policy that selects only one Kubernetes cluster with the proper labels. In a shared environment, multiple Kubernetes clusters coexist, as the environment is shared among multiple MLPlatforms. We encourage you to check out the Environments page to learn more about Mural environments.
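To make the dedicated versus shared distinction concrete, the following is a simplified Python model of label-based cluster selection. It is not the OCM Placement scheduler or Mural's implementation: the Cluster type, the select_clusters function, and the palette.ai/mlplatform label are hypothetical, while the palette.ai/mode: "shared" label comes from the shared cluster registration step described on this page. The only rule it encodes is the one stated above: a dedicated environment resolves to exactly one cluster, and a shared environment may resolve to several.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """A spoke cluster and its labels (hypothetical model)."""
    name: str
    labels: dict[str, str] = field(default_factory=dict)


def matches(labels: dict[str, str], selector: dict[str, str]) -> bool:
    """Return True if every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_clusters(clusters: list[Cluster], selector: dict[str, str],
                    dedicated: bool) -> list[str]:
    """Return the names of the clusters the environment would target.

    A dedicated environment must resolve to exactly one cluster;
    a shared environment may resolve to several.
    """
    selected = [c.name for c in clusters if matches(c.labels, selector)]
    if dedicated and len(selected) != 1:
        raise ValueError(
            f"dedicated environment matched {len(selected)} clusters, expected 1")
    return selected


clusters = [
    Cluster("mlp-a", {"palette.ai/mlplatform": "mlp-a"}),  # hypothetical label
    Cluster("shared-1", {"palette.ai/mode": "shared"}),
    Cluster("shared-2", {"palette.ai/mode": "shared"}),
]
print(select_clusters(clusters, {"palette.ai/mlplatform": "mlp-a"}, dedicated=True))  # ['mlp-a']
print(select_clusters(clusters, {"palette.ai/mode": "shared"}, dedicated=False))  # ['shared-1', 'shared-2']
```

Raising an error when a dedicated selector matches zero or several clusters mirrors the isolation guarantee: a dedicated environment that could land on more than one cluster would no longer be dedicated.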

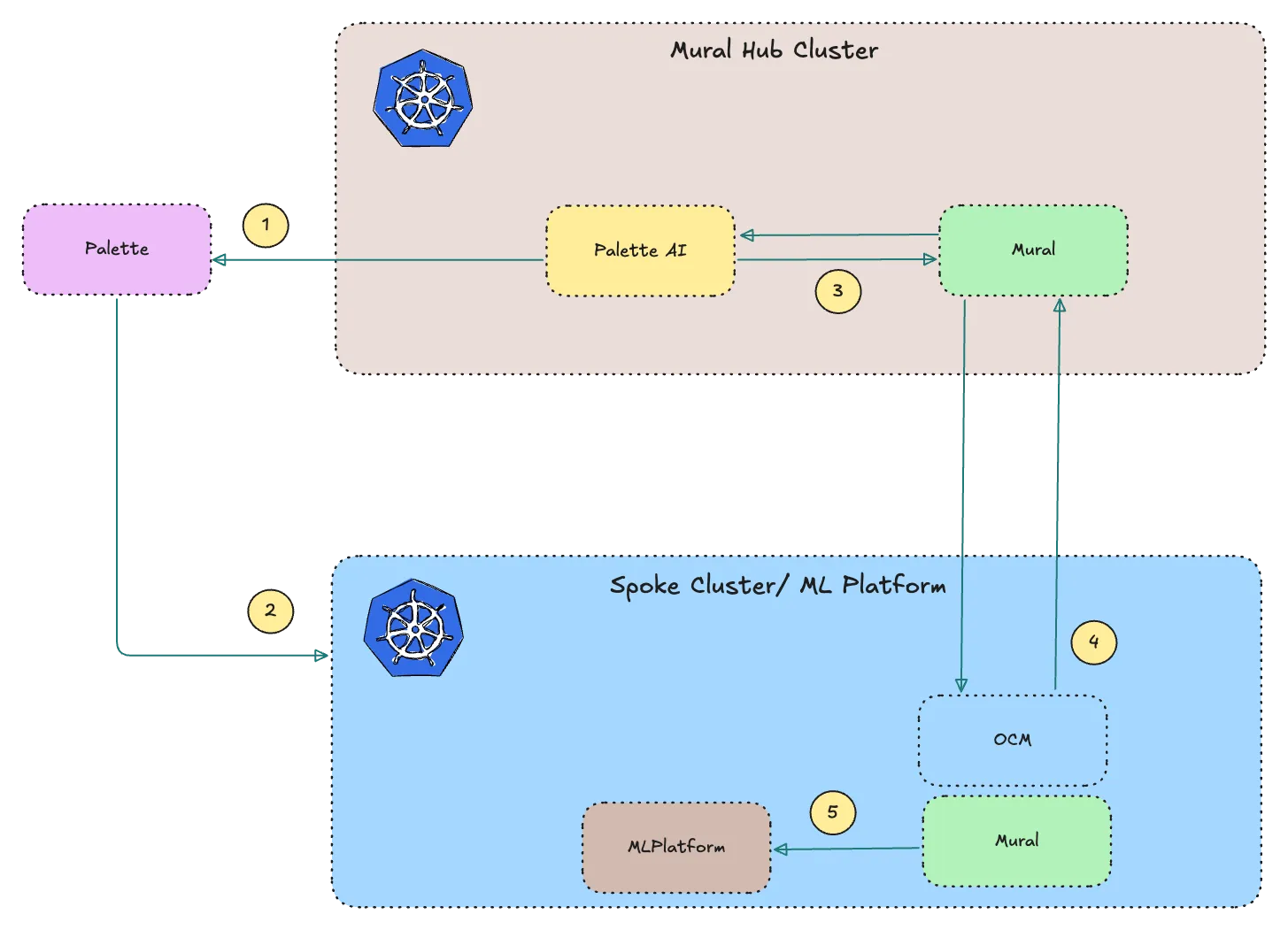

The diagram illustrates the following order of events when a dedicated environment is used.

- PaletteAI gathers the available compute resources and requests Palette to create a dedicated Kubernetes cluster.

- Palette creates a Kubernetes cluster.

- PaletteAI registers the dedicated Kubernetes cluster with Mural as a new spoke.

- The Mural hub installs OCM and Mural on the dedicated Kubernetes cluster to facilitate the deployment of the MLPlatform.

- Mural deploys the MLPlatform to the dedicated Kubernetes cluster.

GPU Limits

If the project Compute Profile contains specific GPU limits, the MLPlatform will respect the GPU limits. For example, if the project Compute Profile contains a GPU limit of 4 for the NVIDIA A100 GPU family, and the MLPlatform requests 6 NVIDIA A100 GPUs, the MLPlatform will be rejected.

```yaml
apiVersion: palette.ai/v1alpha1
kind: ComputeProfile
metadata:
  name: edge-compute-profile
  namespace: project-a
spec:
  gpuLimits:
    'NVIDIA-A100': 4
```
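The admission rule above can be sketched in Python as a per-family comparison between the GPUs an MLPlatform requests and the Compute Profile's gpuLimits. This is an illustrative model, not PaletteAI's admission logic: the check_gpu_request function is hypothetical, and treating GPU families that have no entry in gpuLimits as unlimited is an assumption.

```python
def check_gpu_request(gpu_limits: dict[str, int],
                      requested: dict[str, int]) -> list[str]:
    """Return one message per GPU family whose request exceeds its limit.

    An empty list means the request fits within the Compute Profile.
    Families without an entry in gpu_limits are treated as unlimited (assumption).
    """
    violations = []
    for family, count in requested.items():
        limit = gpu_limits.get(family)
        if limit is not None and count > limit:
            violations.append(f"{family}: requested {count}, limit {limit}")
    return violations


# Limits taken from the edge-compute-profile example above.
gpu_limits = {"NVIDIA-A100": 4}

print(check_gpu_request(gpu_limits, {"NVIDIA-A100": 6}))  # ['NVIDIA-A100: requested 6, limit 4']
print(check_gpu_request(gpu_limits, {"NVIDIA-A100": 4}))  # []
```

A non-empty result corresponds to the rejected case in the example: six NVIDIA A100 GPUs requested against a limit of four.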

Delete Policy

Dedicated MLPlatforms support a delete policy, which dictates what happens to the underlying Kubernetes cluster when the MLPlatform is deleted. You can configure the delete policy when you create or update the MLPlatform. The following delete policies are supported:

- Delete: The Kubernetes cluster is deleted when the MLPlatform is deleted. This is the default delete policy.

- Orphan: The Kubernetes cluster is retained when the MLPlatform is deleted.
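The policy resolution can be modeled as a small lookup with a default. This is a sketch under assumptions: the DeletePolicy enum and the cluster_action_on_delete function are hypothetical, and only the policy names Delete and Orphan and the Delete default come from this page. The actual MLPlatform field that carries the policy is not modeled here.

```python
from enum import Enum
from typing import Optional


class DeletePolicy(Enum):
    """Delete policies supported by dedicated MLPlatforms."""
    DELETE = "Delete"
    ORPHAN = "Orphan"


def cluster_action_on_delete(policy: Optional[str] = None) -> str:
    """Describe what happens to the dedicated cluster when its MLPlatform is deleted.

    An unset policy falls back to Delete, the default. An unknown value
    raises ValueError from the Enum lookup.
    """
    resolved = DeletePolicy(policy) if policy is not None else DeletePolicy.DELETE
    if resolved is DeletePolicy.DELETE:
        return "delete cluster"
    return "retain cluster"


print(cluster_action_on_delete())          # delete cluster
print(cluster_action_on_delete("Orphan"))  # retain cluster
```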

Shared Environment

A shared environment is a Kubernetes cluster that is shared by multiple MLPlatforms. Teams can use shared environments to maximize the utilization of the compute resources available to them. When a data scientist, ML engineer, or other business user wants to deploy an MLPlatform, they have two options for shared mode deployment:

- Deploy to an existing shared environment - Select a pre-existing shared cluster that already hosts other MLPlatforms

- Create a new shared cluster - Provision a new Kubernetes cluster that will be configured for shared use by multiple MLPlatforms

To create a new shared cluster, a Palette integration must be configured, and the compute resources must be available. Otherwise, only existing shared environments will be available.

Shared Environment Usage

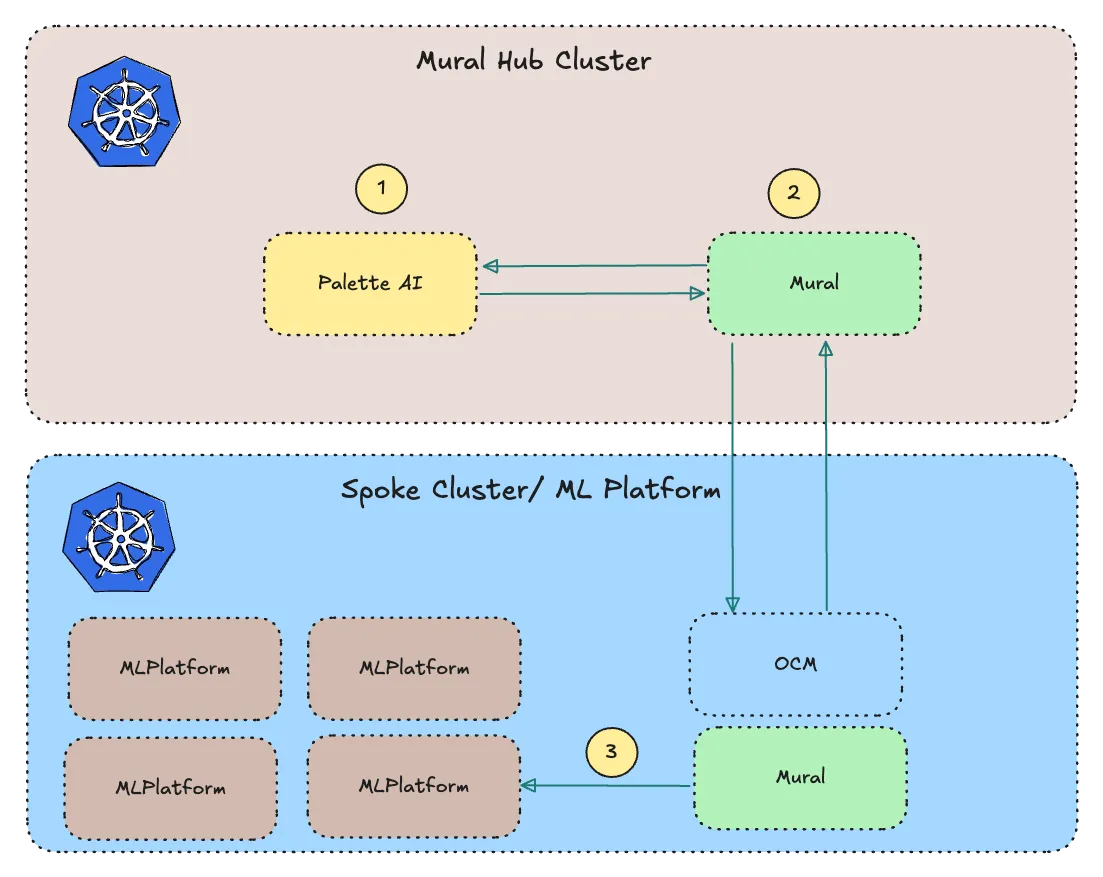

The diagram illustrates the following sequence of events when deploying an MLPlatform to an existing shared environment.

- PaletteAI submits a request to Mural to deploy a new MLPlatform to a shared environment.

- Mural prepares the MLPlatform for deployment by selecting the appropriate Kubernetes cluster and namespace.

- The MLPlatform is installed in the shared environment.

New Shared Cluster Deployment

When creating a new shared cluster, the deployment process is similar to dedicated mode but with shared configuration:

- PaletteAI gathers the available compute resources and requests Palette to create a new Kubernetes cluster.

- Palette creates a Kubernetes cluster configured for shared use.

- PaletteAI registers the new Kubernetes cluster with Mural as a shared spoke with the palette.ai/mode: "shared" label.

- The Mural hub installs OCM and Mural on the new Kubernetes cluster to facilitate shared MLPlatform deployments.

- Mural deploys the MLPlatform to the new shared cluster.

Shared Cluster Lifecycle

When deploying a new shared cluster (not using an existing shared environment), the shared Kubernetes cluster will always be retained when the MLPlatform is deleted. This ensures that other MLPlatforms can continue using the shared infrastructure without disruption.

Shared environments are Mural environments behind the scenes.

Components of an MLPlatform

An MLPlatform consists of two main components: a Palette Cluster Profile and a Mural WorkloadProfile. Together, these two components form a complete MLPlatform stack.

Depending on the deployment mode, an MLPlatform requires either both a Palette Cluster Profile and a WorkloadProfile, or only a WorkloadProfile. The Cluster Profile contains the core infrastructure software needed to deploy a Kubernetes cluster, such as the Operating System, Kubernetes, Container Network Interface (CNI), and Container Storage Interface (CSI). The WorkloadProfile contains the non-core infrastructure software needed to deploy an MLPlatform. Machine learning frameworks and applications are considered non-core infrastructure software, so they are placed in the WorkloadProfile rather than the Cluster Profile.

To better understand the relationship between the Cluster Profile and the WorkloadProfile, the following diagram illustrates it using a fictional MLPlatform. You can think of the example as an MLPlatform for deploying ClearML.

As stated earlier, not all MLPlatforms require a Palette cluster profile. A Cluster Profile is only required when a new cluster is created for the MLPlatform. This includes creating a new dedicated cluster or creating a new shared cluster through Palette. When using existing shared environments or Bring-Your-Own-Cluster scenarios, no Cluster Profile is required and only a Mural WorkloadProfile is needed.

The following table summarizes the different scenarios and the required components for each scenario.

| Scenario | Deployment Mode | Palette Cluster Profile | Mural WorkloadProfile |
|---|---|---|---|
| New Dedicated Cluster | Dedicated | Required | Required |
| New Shared Cluster | Shared | Required | Required |
| Existing Shared Environment | Shared | Not Applicable | Required |
| Bring-Your-Own-Cluster | Shared | Not Applicable | Required |
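The table reduces to a single rule: every MLPlatform needs a Mural WorkloadProfile, and a Palette Cluster Profile is needed only when Palette provisions a new cluster. The following Python sketch encodes that rule so the table can be derived rather than memorized. The Scenario type and the required_profiles function are hypothetical illustrations, not part of PaletteAI.

```python
from typing import NamedTuple


class Scenario(NamedTuple):
    name: str
    deployment_mode: str
    creates_new_cluster: bool  # True when Palette provisions a new cluster


def required_profiles(scenario: Scenario) -> set[str]:
    """Every MLPlatform needs a WorkloadProfile; a Cluster Profile is needed
    only when Palette provisions a new cluster."""
    profiles = {"WorkloadProfile"}
    if scenario.creates_new_cluster:
        profiles.add("ClusterProfile")
    return profiles


# One entry per row of the table above.
SCENARIOS = [
    Scenario("New Dedicated Cluster", "Dedicated", True),
    Scenario("New Shared Cluster", "Shared", True),
    Scenario("Existing Shared Environment", "Shared", False),
    Scenario("Bring-Your-Own-Cluster", "Shared", False),
]

for s in SCENARIOS:
    print(f"{s.name:<28} {s.deployment_mode:<9} {sorted(required_profiles(s))}")
```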

If a Palette cluster profile is required, it must be created and configured in Palette. If a Mural workload profile is required, it must be created and configured in Mural. We recommend you model each component in the respective platform's console for an optimal user experience.

Discovery

How does the PaletteAI UI discover the available Cluster Profiles and Workload Profiles? The short answer is through required tags. Tags allow the PaletteAI UI to dynamically find the available Cluster Profiles and Workload Profiles. Without them, PaletteAI administrators would need to manually track and update the available profiles.

Similar to the diagram above, the PaletteAI UI presents the data scientist, ML engineer, or business user who is deploying the MLPlatform with a complete stack that combines the Cluster Profile and the Workload Profile. Behind the scenes, the PaletteAI UI automatically selects the correct Cluster Profile and Workload Profile for the MLPlatform deployment, so users do not need to select these profiles manually. This reduces the friction of deploying an MLPlatform for data scientists, ML engineers, and business users.

When you create an MLPlatform custom resource directly through YAML, you must specify the Cluster Profile reference and the WorkloadProfile reference yourself. Only the PaletteAI UI dynamically discovers the available Cluster Profiles and Workload Profiles.

Cluster Profile

Cluster Profiles intended for PaletteAI must have the palette.ai tag. They must also have a second tag that matches the name of the MLPlatform. For example, if the MLPlatform is for ClearML, the Cluster Profile must have the clearml tag to be discovered by PaletteAI.

The following MLPlatform name tags are supported for Palette Cluster Profiles:

- clearml

- runai
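The Cluster Profile discovery rule can be sketched as a tag filter: a profile qualifies only if it carries both the palette.ai tag and the tag for the requested platform. This is a minimal sketch, assuming tags are represented as a plain list of strings per profile; the discover_cluster_profiles function and the profile names are hypothetical, and this is neither PaletteAI's implementation nor a Palette API call.

```python
SUPPORTED_PLATFORM_TAGS = {"clearml", "runai"}


def discover_cluster_profiles(profiles: dict[str, list[str]],
                              platform: str) -> list[str]:
    """Return the names of Cluster Profiles tagged for PaletteAI and the platform."""
    if platform not in SUPPORTED_PLATFORM_TAGS:
        raise ValueError(f"unsupported platform tag: {platform}")
    return sorted(
        name for name, tags in profiles.items()
        if "palette.ai" in tags and platform in tags
    )


# Hypothetical Cluster Profiles mapped to their tags.
profiles = {
    "clearml-edge": ["palette.ai", "clearml"],
    "runai-gpu": ["palette.ai", "runai"],
    "clearml-untagged": ["clearml"],  # missing palette.ai, so not discovered
}
print(discover_cluster_profiles(profiles, "clearml"))  # ['clearml-edge']
```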

Workload Profile

WorkloadProfiles must have the palette.ai: true tag to be discovered by PaletteAI. They must also have a palette.ai/platform tag that matches the name of the MLPlatform and a palette.ai/platform-version tag that matches the version of the MLPlatform. For example, if the MLPlatform is ClearML version 7.14.5, the WorkloadProfile must have the palette.ai/platform: clearml and palette.ai/platform-version: 7.14.5 tags to be discovered by PaletteAI.

To summarize, the following tags are required for a Workload Profile to be discovered by PaletteAI.

- palette.ai: true

- palette.ai/platform: <mlplatform-name>

- palette.ai/platform-version: <mlplatform-version>

Below is a YAML example of a WorkloadProfile for ClearML with the required tags. The WorkloadProfile spec is omitted for brevity.

```yaml
apiVersion: mural.sh/v1beta1
kind: WorkloadProfile
metadata:
  name: clearml
  namespace: mural-system
  labels:
    # Kubernetes label values must be strings, so true is quoted.
    palette.ai: "true"
    palette.ai/platform: clearml
    palette.ai/platform-version: 7.14.5
```
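The WorkloadProfile discovery rule can be sketched as a check that all three labels are present with the expected values. This is an illustrative model under assumptions: manifests are represented as Python dictionaries shaped like parsed YAML, the discover_workload_profiles function and the non-ClearML-example profiles are hypothetical, and no Kubernetes client or PaletteAI API is involved. Label values are compared as strings, as they are in Kubernetes.

```python
def discover_workload_profiles(profiles: list[dict], platform: str,
                               version: str) -> list[str]:
    """Return the names of WorkloadProfiles carrying all three discovery labels."""
    required = {
        "palette.ai": "true",
        "palette.ai/platform": platform,
        "palette.ai/platform-version": version,
    }
    names = []
    for profile in profiles:
        metadata = profile.get("metadata", {})
        labels = metadata.get("labels", {})
        if all(labels.get(key) == value for key, value in required.items()):
            names.append(metadata["name"])
    return names


# The first entry mirrors the ClearML example above; the others are hypothetical.
profiles = [
    {"metadata": {"name": "clearml", "namespace": "mural-system", "labels": {
        "palette.ai": "true",
        "palette.ai/platform": "clearml",
        "palette.ai/platform-version": "7.14.5"}}},
    {"metadata": {"name": "clearml-old", "labels": {
        "palette.ai": "true",
        "palette.ai/platform": "clearml",
        "palette.ai/platform-version": "7.13.0"}}},
    {"metadata": {"name": "no-labels"}},
]
print(discover_workload_profiles(profiles, "clearml", "7.14.5"))  # ['clearml']
```

Because the version label is part of the match, two WorkloadProfiles for the same platform at different versions remain distinct, and a profile missing any one label is never offered.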