Overview

PaletteAI abstracts away the complexity of deploying AI and ML application stacks on Kubernetes. PaletteAI is a self-service platform that allows data science teams to deploy and manage their own AI and ML application stacks, without the need for platform engineering teams to get involved. Platform engineering teams configure how and what infrastructure is provisioned through PaletteAI, as well as the defaults applied to the application stacks to ensure performance, cost control, security, compliance and more.

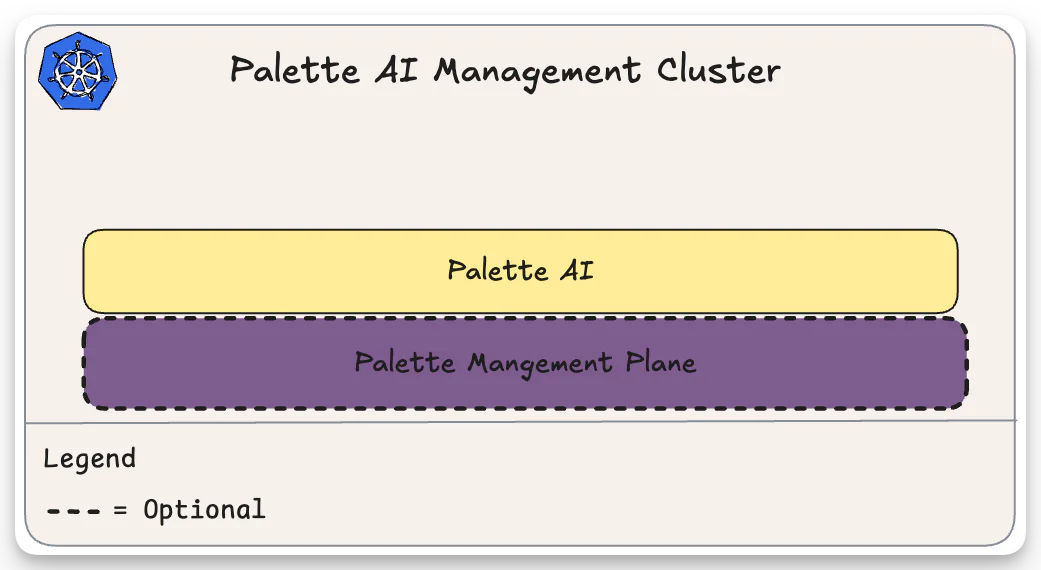

PaletteAI is built on top of Palette and leverages Palette's strengths to provide a seamless deployment and lifecycle management experience for data science teams. Palette is optional but recommended to help platform engineering teams manage the underlying infrastructure.

PaletteAI must be deployed on a Kubernetes cluster, preferably on the same cluster where self-hosted Palette is installed.

Programmatic Support

PaletteAI is built on Kubernetes Custom Resource Definitions (CRDs), so every PaletteAI resource can be managed programmatically through the Kubernetes API. You can define PaletteAI resources in YAML files, keep them in a version control system such as Git, and apply them to your PaletteAI instance with kubectl or through a GitOps workflow.
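As a rough illustration of the declarative workflow described above, the following Python sketch builds a Kubernetes-style custom resource manifest and serializes it to JSON so it can be committed to Git. The API group, version, kind, and spec fields are hypothetical placeholders chosen for this example. They are not the real PaletteAI CRD schema, which you should take from the product reference.

```python
import json


def build_manifest(name: str, namespace: str, spec: dict) -> dict:
    """Return a Kubernetes-style custom resource manifest as a plain dict."""
    return {
        # Assumed group/version and kind, for illustration only.
        "apiVersion": "example.paletteai.local/v1alpha1",
        "kind": "ExampleAppDeployment",
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


# The spec keys below are also hypothetical.
manifest = build_manifest(
    "team-a-notebook",
    "data-science",
    {"profile": "notebook-stack", "profileVersion": "1.0.0"},
)

# Sorted keys keep the serialized file stable, which makes Git diffs easier to review.
manifest_json = json.dumps(manifest, indent=2, sort_keys=True)
print(manifest_json)
```

The printed document could be saved to a file in a repository and applied with `kubectl apply -f <file>`, since kubectl accepts JSON as well as YAML manifests.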

Palette

Palette is the engine that drives the infrastructure provisioning for PaletteAI. Through Palette, platform engineering teams can configure bare metal devices to be used as available compute resources for PaletteAI. Palette addresses the different challenges encountered in constrained environments, such as Edge, airgap, and highly regulated environments, through the Palette VerteX edition. By delegating infrastructure provisioning and day-2 management to Palette, platform engineering teams can focus their attention on the application stacks rather than the underlying infrastructure.

Unfamiliar with Palette? Check out the What is Palette? page to learn more about Palette and its value proposition.

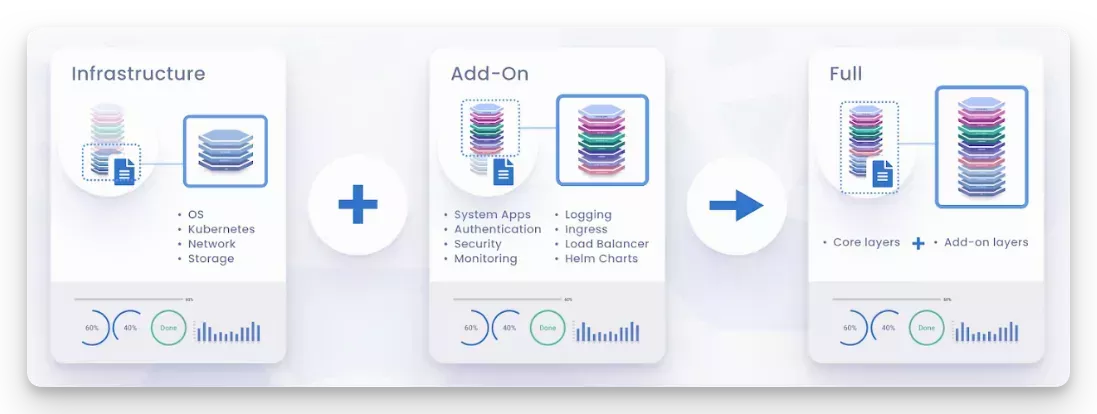

Palette manages the lifecycle of existing and new Kubernetes clusters and the core infrastructure layers, including the operating system, Kubernetes, networking, and storage.

Cluster Profile

Palette's cluster profile lets platform engineers fine-tune the cluster configuration to meet the needs of its users, development and data science teams alike. Palette uses the cluster profile to reconcile the cluster and ensure that the infrastructure software specified in the profile is installed. Platform engineers can maintain different versions of a cluster profile and roll out updates to clusters as needed.
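The reconciliation idea can be pictured as a comparison between the layers a profile declares and the layers a cluster currently reports. The sketch below is a conceptual model only, not Palette's implementation. The function name, layer names, and version strings are made up for illustration.

```python
def plan_reconcile(desired: dict[str, str], installed: dict[str, str]) -> dict[str, list[str]]:
    """Compare desired layer versions with the installed ones and list the actions needed."""
    plan: dict[str, list[str]] = {"install": [], "update": [], "remove": []}
    for layer, version in desired.items():
        if layer not in installed:
            plan["install"].append(layer)
        elif installed[layer] != version:
            plan["update"].append(layer)
    for layer in installed:
        if layer not in desired:
            plan["remove"].append(layer)
    return plan


# Hypothetical profile version and cluster state.
profile_v2 = {"os": "os-22.04", "kubernetes": "1.30", "network": "cni-3.28", "storage": "csi-2.0"}
cluster_state = {"os": "os-22.04", "kubernetes": "1.29", "network": "cni-3.28"}

plan = plan_reconcile(profile_v2, cluster_state)
print(plan)
```

In this model, publishing a new profile version changes only the `desired` input, and the resulting plan describes what a rollout to a given cluster would need to change.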

Check out the Cluster Profile section in the Palette documentation to learn more about cluster profiles.

Device Information

Palette is able to gather information about the devices or machines that platform engineers want to use as available compute resources for PaletteAI. Information such as the device type, network interface, CPU, memory, GPU, GPU memory, and more is collected to help data science teams customize the ML platform stack. If Palette is unable to gather information about a device, platform engineers can manually assign a set of key tags to help PaletteAI identify the device correctly.
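One way to picture the fallback from discovered information to manual tags is a device record that keeps the two sources separate. This is a minimal sketch under stated assumptions: the `Device` class, its attribute keys, and the rule that discovered facts take precedence over manual tags are all hypothetical and are not taken from the Palette data model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Device:
    name: str
    discovered: dict = field(default_factory=dict)   # facts gathered automatically
    manual_tags: dict = field(default_factory=dict)  # tags assigned by a platform engineer

    def attribute(self, key: str) -> Optional[str]:
        """Prefer discovered facts and fall back to manually assigned tags (assumed precedence)."""
        if key in self.discovered:
            return self.discovered[key]
        return self.manual_tags.get(key)


# Hypothetical devices: one with discovered facts, one described only by tags.
edge_box = Device("edge-01", discovered={"cpu_cores": "32", "gpu_count": "2"})
lab_box = Device("lab-07", manual_tags={"gpu_count": "4"})

print(edge_box.attribute("gpu_count"), lab_box.attribute("gpu_count"))
```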

PaletteAI Engine

PaletteAI's engine, called Mural, is a platform that simplifies application modeling and lifecycle management by abstracting Kubernetes and cloud complexities to make it seamless to deploy applications consistently and repeatably. PaletteAI leverages Mural's WorkloadProfiles to manage the lifecycle of the non-core infrastructure software dependencies, including AI and ML frameworks and applications. Mural also allows for customized workload placement through the use of Environments. Through Mural, platform engineers can configure different environments for different teams to use, and enforce best practices and governance for the application stacks.

Not familiar with Mural? Check out the What is Mural? page to learn more about Mural and its value proposition.

Multi-Cluster Workload Placement

Mural includes multi-cluster orchestration support that lets platform engineers customize the placement of workloads across different clusters. For PaletteAI, this means platform engineers can configure different environments for different teams or tailor hardware to different use cases. End users can then create an isolated environment for their own use or work in an environment shared across teams, without worrying about the underlying infrastructure.
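The placement idea can be sketched as a filter over environments, where each environment groups clusters and records who may use it and what hardware it offers. The `Environment` fields and the `eligible_environments` function below are hypothetical names for illustration. Mural's actual placement model may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str
    clusters: tuple[str, ...]  # clusters that back this environment
    teams: frozenset[str]      # teams allowed to deploy here
    has_gpu: bool              # whether GPU hardware is available


def eligible_environments(envs: list[Environment], team: str, needs_gpu: bool) -> list[str]:
    """Return the names of environments the team may use that satisfy the hardware need."""
    return [
        env.name
        for env in envs
        if team in env.teams and (env.has_gpu or not needs_gpu)
    ]


# Hypothetical setup: one shared CPU environment and one GPU environment isolated to a team.
environments = [
    Environment("shared-cpu", ("cluster-a", "cluster-b"), frozenset({"vision", "nlp"}), False),
    Environment("vision-gpu", ("cluster-c",), frozenset({"vision"}), True),
]

print(eligible_environments(environments, "vision", needs_gpu=True))
print(eligible_environments(environments, "nlp", needs_gpu=False))
```

A shared environment is modeled here simply as one whose `teams` set contains more than one team, while an isolated environment lists a single team.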

Bring-Your-Own-Cluster (BYOC)

PaletteAI also supports existing clusters created outside of Palette. This 'brownfield' or Bring-Your-Own-Cluster (BYOC) support is helpful in scenarios where clusters already exist and are managed by a different platform. Through Mural, PaletteAI can use those Kubernetes clusters and offer the same experience. Platform engineers only need to enroll the cluster into Mural, and PaletteAI can then use it as an eligible environment for data science teams.

Workload Profiles

Mural's Workload Profiles are what PaletteAI uses to deploy ML and AI application stacks. A platform engineer can model, for example, a Run:ai or ClearML application stack that consumes the public Helm chart provided by the application vendor, and then add the dependencies needed for the application to deploy correctly. Through components and traits, an ML application stack can be shaped so that the correct configuration is applied regardless of where it is deployed. Similar to Palette's cluster profile, Mural's Workload Profiles allow platform engineers to configure different versions of a workload profile and roll out updates to the deployed application stacks as needed.
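To make the component and trait idea more concrete, the sketch below treats a component as a set of base chart values and each trait as a set of overrides merged on top, in order. This is an assumption made for illustration: the function names, value keys, and the recursive-merge behavior are hypothetical and do not describe how Mural actually renders a Workload Profile.

```python
from copy import deepcopy


def apply_traits(component_values: dict, traits: list[dict]) -> dict:
    """Return a copy of the component values with each trait's overrides merged in order."""
    rendered = deepcopy(component_values)
    for trait in traits:
        _merge(rendered, trait)
    return rendered


def _merge(target: dict, overrides: dict) -> None:
    """Recursively merge overrides into target; non-dict values replace existing ones."""
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(target.get(key), dict):
            _merge(target[key], value)
        else:
            target[key] = deepcopy(value)


# Hypothetical base values for a vendor chart and two traits added by a platform engineer.
chart_values = {"replicas": 1, "resources": {"limits": {"cpu": "2"}}}
traits = [
    {"resources": {"limits": {"example.com/gpu": "1"}}},  # add a GPU limit
    {"replicas": 3},                                      # scale out
]

rendered = apply_traits(chart_values, traits)
print(rendered)
```

Because the base values are copied before merging, the same component definition can be reused with a different list of traits for each target environment.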

Complete Picture

PaletteAI uses both Palette and its engine, Mural, to deliver a seamless experience for data science teams. Palette is used to deploy and manage the lifecycle of Kubernetes clusters. Palette also manages the core infrastructure layers through the cluster profile. Mural is used to manage the lifecycle of non-core infrastructure software dependencies and AI/ML applications through Workload Profiles.

Mural also manages the environments that data science teams use, which enables PaletteAI to control the placement of the application stack granularly. If a cluster that was not deployed by Palette is used (BYOC), PaletteAI can still use that cluster as an eligible environment through Mural.

By combining the power of Palette and Mural, PaletteAI provides a seamless experience for data science teams to deploy and manage ML and AI application stacks in production, abstracting away the complexity of deploying and managing the underlying infrastructure. This is what allows PaletteAI to be a self-service AI/ML application stack platform. At the same time, it offers platform engineering teams the ability to configure and manage the underlying infrastructure, application deployment, and required dependencies to ensure conformance to best practices and governance.

Resources

Check out the following resources to learn more about PaletteAI's concepts and components.